What do rationalists spend money on?

Analyzing the 2025 ACX grants

Scott Alexander just gave out over $1.6 million in donations to projects. Who and what is he giving that money to? Let’s look into it.

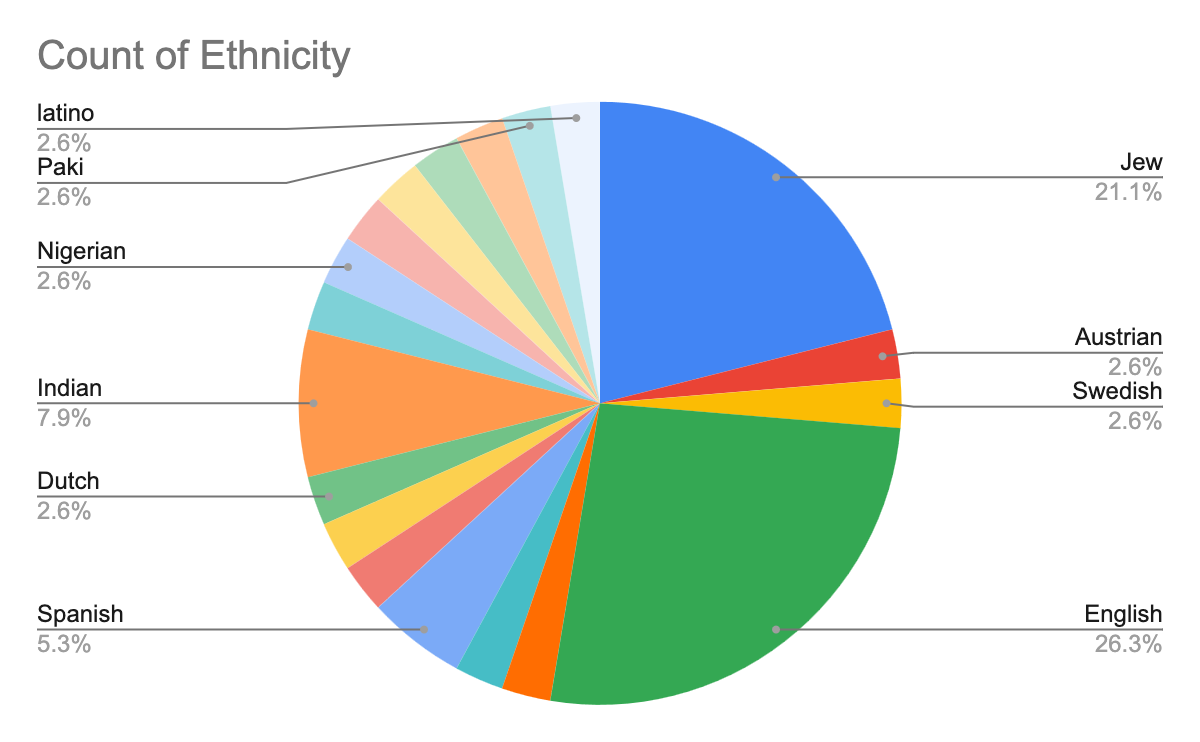

I analyzed the ethnicity of the recipients as well as the project types in this spreadsheet here. As you can see in the pie chart above, the English are really punching above their weight. Where are all the Germans? Basically all of these English people were in the UK and the USA, which houses about 200 million Anglos collectively. Germany, Austria, and Switzerland collectively have about 95 million German speakers. If you throw in French speakers in the low countries, Switzerland, and France, you get 170 million French or German speaking Hajnaloids.

So these people got wrecked even though only 20% of the prizes went to legacy Americans. Maybe it would pay for them to invest in better internet infrastructure (ich schaue die Deutschen an) and English speaking skills (je regarde les Français). Or maybe the English are uniquely smart. Or maybe they’re uniquely terrible.

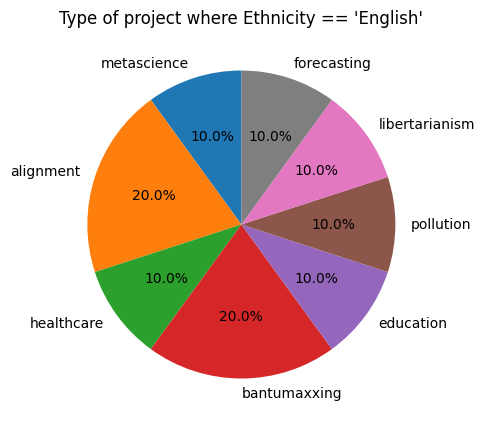

There would have been no fair-skinned bantu-maxxers in this funding round if it weren’t for Angloids!

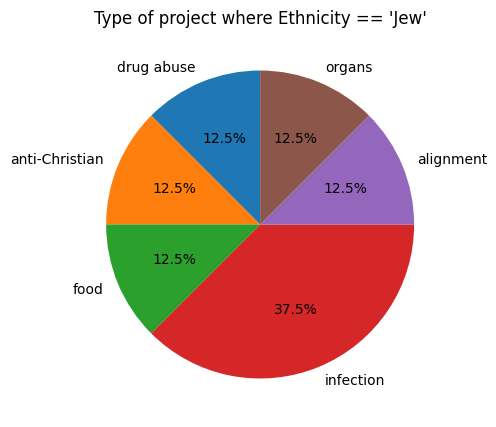

In contrast, Jews are really concerned with reducing infection. Maybe their increased neuroticism?

When Jews colonize a place, they actually get rid of the locals. When Angloids colonize a place, they create more locals, then get pushed out, then say sorry, then import hundreds of millions of locals into their own countries! Angloids are epic bantumaxxers.

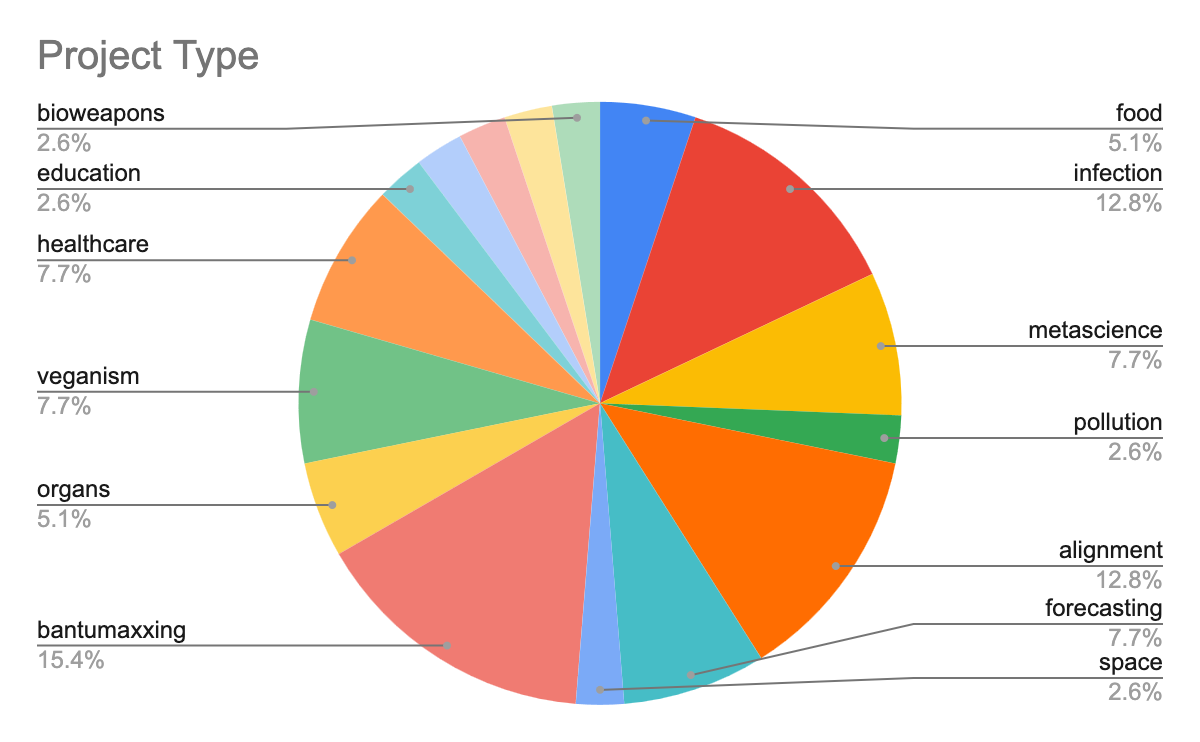

Anyway, enough on ethnicity. Here’s what’s being funded. I’d say at least 35% of the projects are a waste of time. That is, bantumaxxing, veganism, and alignment. Let me explain why.

The donations to bantumaxxing and veganism are motivated by a naive utlitarianism. It goes something like this: there are feel good qualia, and there are bad feel qualia. Even some of the very lowest of animals have qualia. Qualia apparently stops around shrimp because we got a shrimp welfare project, but at the same time there’s a screw-worm genocide project. So I guess screw-worms don’t have bad-feel, or they don’t matter because they’re hecking bad.

The hypocritical, vibes-based way in which badfeel and goodfeel is ranked and evaluated in different species aside, this moral system is deeply unwise. There’s a basic moral lesson taught to children by the Bible, Greek fables, and so on, that delayed gratification is good. Scott Alexander’s utilitarianism is a fancy, altruistic looking failure of the marshmallow test. It rejects a basic moral axiom of higher man, that goodfeel is not good and badfeel is not bad. Goodfeel and badfeel are a morality compass for criminals and other low types of men, little children, and animals. Higher men are beyond pleasure and pain.

Rather, good things include beauty, strength, virtue, and God. Not all populations of replicators possess these things in equal abundance. What’s more beautiful and virtuous, a world where we promote the reproduction of people with the highest civic worth and help subsaharan Africa maintain their population at something like 10 million bantus total, or a world where we ignore the reproduction of high civic worth people and create 2 billion subsaharan Africans? Obviously the former is better, but Scott Alexander funds the latter.

The same goes to veganism. Even Hitler was sympathetic to animal welfare. But would he have sacrificed his Reich for it? No. Veganism is currently a opportunity cost which distracts from eugenics. The horrors of factory farms are primarily driven by maximizing low quality human life. There are way too many low IQ people who basically just eat, work, shit, fuck, sleep, and repeat. They all want to eat factory farmed torture food because they have no empathy. Their cognitive skills are not refined enough to have the level of empathy Scott Alexander knows to be beautiful. If you fund eugenics, the veganism issue will solve itself. First the impetus for factory farming will disappear. Then the new geniuses of the Earth will invent new vegan food production methods, like lab-grown meat that is better than natural grassfed meat.

Now for alignment, this is less of an ethical issue and more of a reality check issue. Scott Alexander’s views on AI are delusional. That’s probably why he wasted over $160,000 on alignment. It could have been worse. It probably should have been worse. Maybe his priors are updating in a more realistic direction. He only threw $5k at a really delulu project, one about feeding an LLM stories to prevent a grey goo apocalypse (lol). The rest were more down to Earth political schemes. One was trying to make sure GPT 7 won’t have normal academic researcher biases (these are leftist, so if they’re good at this it means they’ll make an auto-research with no anti-hereditarian bias, which is good). A couple were about influencing governments to take action on alignment. I still think these are pretty silly. It looks to me like the rate of LLM improvement is diminishing rather rapidly. GPT 5 was a major let-down compared to what Bay Area AI Messiah people were predicting. It basically proved their faith in AI is fake.

If you were born in 1950, you basically grow old watching the first computers turn into HD streaming machines and become integrated into almost all facets of life. But it really did take 75 years. I think with AI we’re going to see major shake-ups in entertainment, education, remote medicine, and some white-collar jobs over the course of the next 25 years. We’ll also probably get self-driving cars within this time. I actually don’t think we’ll see it do much original research during this time. In the 25 years after that, maybe it will start to be able to do some types of iterative research autonomously with minimum error. By that time, I’ll be an old man. I doubt it will extend my life much.

So what kind of future do we want for ourselves? Underwhelming AI? Maybe around my expected death date it will finally start to produce a little bit of research humans can’t understand? Is that good or bad? Shouldn’t we try to keep up with AI? If we do eugenics alongside AI, we’ll accelerate the rate of AI development while minimizing its ability to outpace human intelligence.

Right now they’re trying to align a plagiarism machine with an underlying IQ of an ant. Waste of time, you could be advocating for increasing the mean IQ this generation by 20 points, if only you were brave enough.

The rest of the categories are potentially weakly eugenic. Meta-science is probably the best. After that I’m partial to work on infection, although I don’t know what that will do to selection pressures on immune systems and against mutations. It could be dysgenic, although a lot of infections humans have now are just annoying but kill almost no one. Pollution, space, and bioweapons are good, I suppose, because a ruined Earth with no humans on it can’t be very eugenic. Forecasting seems silly but it might be money for above-average IQ people.

The education category isn’t terrible, it’s money for acceleration. This could be in favor of eugenics depending on how it’s done. I’m planning on writing an article on this soon, so subscribe if you don’t want to miss it:

1. What is the "anti-Christian" charity exactly? I haven't been able to identify it in the list.

2. In every nation, the advances of modernity (better education and healthcare, lower child mortality, longer lifespans) have translated into lower fertility. This is true in Africa as well: fertility, while high, is falling almost everywhere. EA charities that support Africa's development in this way are therefore contributing to a long-term decrease in the number of African people. There will be a temporary increase (people who would otherwise have died survive), but the new conditions arrive sooner and lower fertility sooner. Saying that these charities "create" Africans is wrong.

3. No EA I have seen is a simple hedonic utilitarian. They care about all the higher aspects of living you care about; these all fall under "well-being" and the ability of humans to pursue higher goals. It just so happens that it is much harder to be beautiful, strong, virtuous and faithful when you are malnourished, diseased, or dead. These are the obvious first things to fix, to bring those who need it to a better baseline from which they can pursue other things. Even if they do not, a person who consistently feels base pleasures is, while not dignified, better off than someone miserable or dead, provided they cause no harm. Also, only a few select monks and mystics are beyond pleasure and pain. You are not, I am not, and the overwhelming majority of us are not. That is fine: we can enjoy life and try not to suffer from it (EDIT: while still striving for the more meaningful aspects of life, which also give us pleasure. You seem to use the word "pleasure" strictly for hedonic enjoyment, not for aesthetic appreciation, religious belonging, or the satisfaction of doing something meaningful for humanity and the world. All of these fall under "pleasure", and because you do not define many of your terms, I cannot tell whether my interpretation is correct.) If we secure this baseline, we will still have those monks and mystics to serve as examples and paragons. Taking forced misery out of the world will not change that.

4. Shrimp have different, more complex nervous systems than worms, and there are stronger indicators that they feel pain. This is not an arbitrary distinction (EDIT: I have found detailed, multi-post discussions of exactly this topic: what constitutes pain in animals, how it can be recognized, and which features of nervous systems indicate it, including in invertebrates less complex than shrimp: https://forum.effectivealtruism.org/s/sHWwN8XydhXyAnxFs. The point is that EAs as a collective think deeply and scrupulously about this, and would give you answers if you bothered to check or ask). Furthermore, a screwworm causes vastly more pain to humans than a shrimp ever could. Even if the worm's experience of qualia were similar, the harm it does to humans would likely outweigh its own pain. You treat EA arguments with remarkable glibness and have not put any of your (fair) questions to them; you simply assume their answers are obviously wrong and stupid.

5. Lower-IQ people still have empathy (less than high-IQ people, granted). Almost everyone wants factory farming to some extent because it is a cheap source of good food, and a higher share of geniuses would only partially change that. Most non-philosophical reasons for rejecting veganism (gut reactions, basically) occur across the board: scope insensitivity, fear of confronting the possibility of having done great harm, convenience, and so on. Also, the work low-IQ people do, which you gloss over, is what makes possible the economies of scale and all the wonders you enjoy, and is also why you have food to eat and heat to keep you from freezing (alongside the research and ingenuity of high-IQ people, of course). There is a point beyond which you cannot automate everyone out of existence.

6. You dismiss all of the alignment community's concerns out of hand. You ignore that their arguments work without sci-fi grey goo (which only Eliezer really cares about anymore), that current LLM trajectories do change forecasts and timelines, that we cannot yet tell whether this plateau is truly the beginning of a new winter, that alignment concerns extend beyond LLMs, and that it is better to be prepared either way. Also, terms like "Messiah" and "faith" are strange choices, since alignment people do not want AGI to arrive in its current form; they believe it will be misaligned and cause chaos. They are not waiting for it, and many would be extremely relieved by a new winter and a longer timeline.

7. If AI produces "a bit of research humans can't understand", then it has essentially reached human-level intelligence, and once it has, only computational barriers stand between it and superintelligence. You seem to think this is a large gap. Do you have a specific reason for thinking so? Most discussion (which I agree with) argues the opposite.

Regarding the funding analysis presented, I wonder how the ethnic categorisations actually serve the goal of equitable distribution. It reminds me of balancing biases in AI datasets, which is a complex undertaking.